Multi Sensor Fusion Data For Autonomous Driving Enrico Schroeder Audi AG

Conference website: saiconference cvc

Enrico Schroeder got his master's degree in computer science from Freie Universität Berlin, focusing on artifi...

Feature map transformation for multi-sensor fusion in object detection networks for autonomous driving. Enrico Schröder (1), Sascha Braun (1), Mirko Mählisch (1), Julien Vitay (2), and Fred Hamker (2). (1) Department of Development Sensor Data Fusion and Localisation, Audi AG, 85045 Ingolstadt, Germany, {enrico.schroeder, sascha-alexander.braun, mirko.maehlisch}@audi.de.

Multi View Fusion Of Sensor Data For Improved Perception And Prediction

In the context of autonomous driving, we are especially interested in multi-modal variations of these object detection networks, which detect objects using both camera and lidar sensors. Research in this area has made significant progress since the introduction of the 2D bird view and 3D object detection challenges to the automotive KITTI dataset [3...

KITTI highlighted the importance of multi-modal sensor setups for autonomous driving, and the latest datasets have put a strong emphasis on this aspect. nuScenes [3] is a recently released dataset that is particularly notable for its sensor multimodality. It consists of camera images, lidar point clouds, and radar data, together with 3D...

Feature map transformation for multi-sensor fusion in object detection networks for autonomous driving. Enrico Schröder, Sascha Braun, Mirko Mählisch, Julien Vitay, and Fred Hamker, 2019. DOI: 10.1007/978-3-030-17798-0_12. Corpus ID: 182303919.

The first comprehensive and systematic introduction to multi-sensor fusion for autonomous driving. Addresses the theory of deep multi-sensor fusion from the perspective of uncertainty for both models and data. Elaborates on the key applications of multi-sensor fusion in various perception-related tasks and hardware platforms.
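The 2D bird view representation mentioned above can be illustrated with a small sketch. This is not the method of any paper cited here; it only shows, under assumed conventions, how lidar points might be rasterised into a top-down grid that a detection network could consume. The function name `lidar_to_bev`, the axis convention (x forward, y left), the ranges, and the cell size are all hypothetical choices made for illustration.

```python
import numpy as np

def lidar_to_bev(points, x_range=(0.0, 40.0), y_range=(-20.0, 20.0), cell=0.5):
    """Count lidar points per cell of a top-down (bird view) grid.

    points: array of shape (N, 3) holding x, y, z in metres (assumed layout).
    Returns an integer grid of shape (nx, ny) with the number of points per cell.
    """
    points = np.asarray(points, dtype=float)
    nx = int(round((x_range[1] - x_range[0]) / cell))
    ny = int(round((y_range[1] - y_range[0]) / cell))
    grid = np.zeros((nx, ny), dtype=np.int32)

    # Convert metric coordinates to integer cell indices.
    ix = np.floor((points[:, 0] - x_range[0]) / cell).astype(int)
    iy = np.floor((points[:, 1] - y_range[0]) / cell).astype(int)

    # Discard points that fall outside the grid.
    inside = (ix >= 0) & (ix < nx) & (iy >= 0) & (iy < ny)

    # np.add.at accumulates correctly when several points hit the same cell.
    np.add.at(grid, (ix[inside], iy[inside]), 1)
    return grid

# Hypothetical points: two close together, one far outside the grid.
example_points = [[10.2, 0.1, 0.5], [10.3, 0.2, 1.0], [100.0, 0.0, 0.0]]
bev = lidar_to_bev(example_points)
```

A camera branch would need its own mapping into the same grid before features from both sensors can be combined, which is the kind of problem the feature map transformation work listed above addresses.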

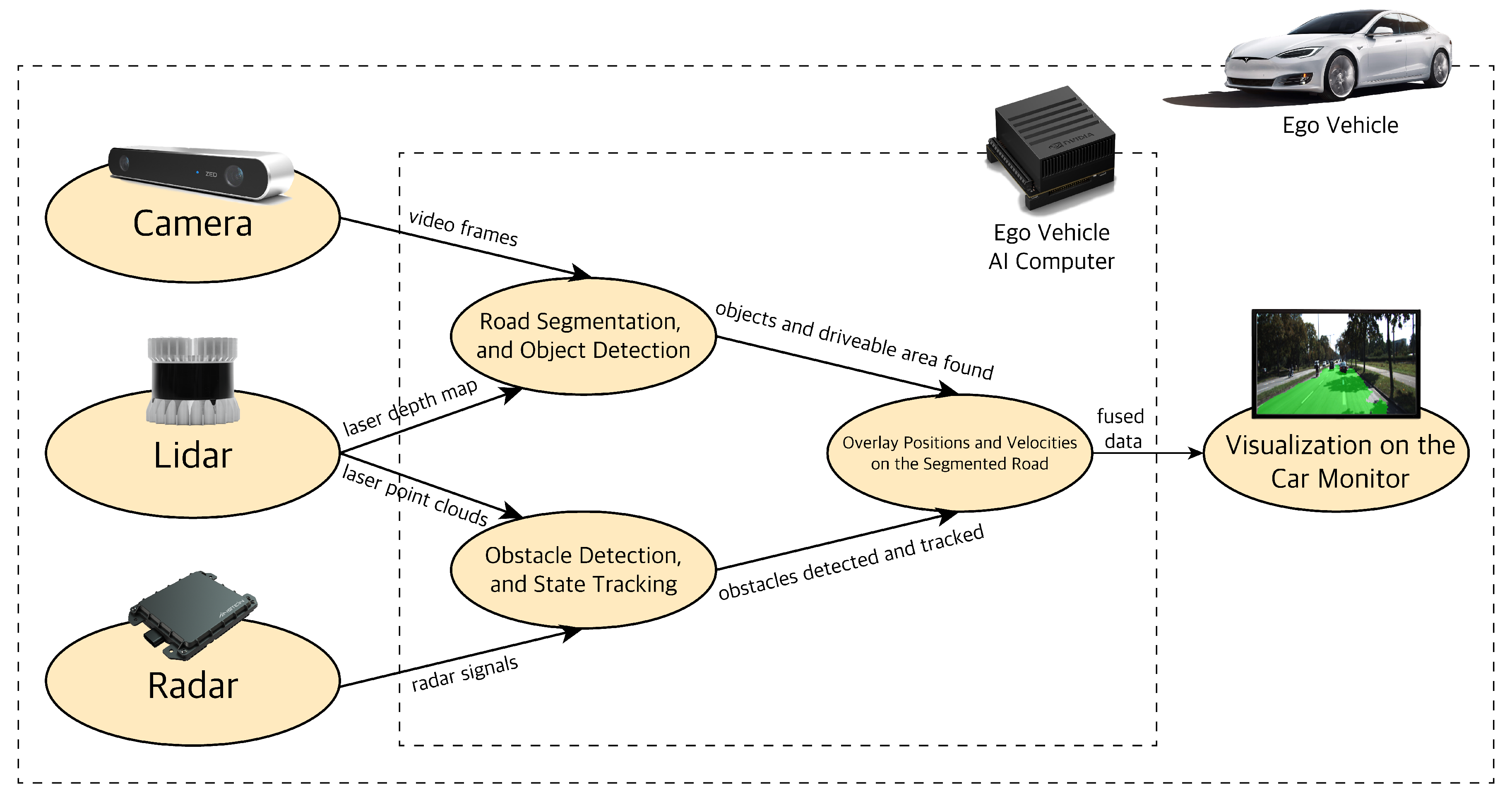

Ensuring ADAS Safety With Multi Sensor Fusion

This book reviews the multi-sensor data fusion methods applied in autonomous driving. The main body is divided into three parts: basic, method, and advance. Starting from the mechanism of data fusion, it reviews the development of automatic perception technology and data fusion technology, and gives a comprehensive overview.

Sensor fusion technology is a critical component of autonomous vehicles, enabling them to perceive and respond to their environment with greater accuracy and speed. This technology integrates data from multiple sensors, such as lidar, radar, cameras, and GPS, to create a comprehensive understanding of the vehicle's surroundings. By combining and analyzing this data, sensor fusion technology...
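As a rough illustration of what integrating data from multiple sensors can mean at its simplest, the sketch below fuses several independent estimates of the same quantity by inverse-variance weighting. This is a textbook technique chosen for illustration, not something the sources above prescribe; the function name `fuse_measurements` and the example readings and variances are hypothetical.

```python
import numpy as np

def fuse_measurements(means, variances):
    """Inverse-variance weighted fusion of independent estimates.

    Each sensor reports a mean and a variance for the same quantity.
    More certain sensors (smaller variance) receive larger weights.
    Returns the fused mean and the fused variance.
    """
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    weights = 1.0 / variances
    fused_var = 1.0 / weights.sum()
    fused_mean = fused_var * (weights * means).sum()
    return fused_mean, fused_var

# Hypothetical range readings (metres) to one obstacle: lidar, radar, camera.
readings = [20.0, 20.6, 19.0]
# Assumed measurement variances (square metres); lidar is taken as most precise.
noise = [0.01, 0.25, 1.0]

fused_mean, fused_var = fuse_measurements(readings, noise)
```

Under the independence assumption, the fused variance is never larger than the smallest individual variance, which is one way to make the "greater accuracy" claim concrete. Real systems typically track state over time, for example with a Kalman filter, rather than fusing single readings.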

Sensors Free Full Text Real Time Hybrid Multi Sensor Fusion

Multi Sensor Data Fusion For Autonomous Ground Vehicle Information
